Introduction

Imagine you're building a machine learning model to predict house prices based on features like the number of bedrooms, location, and square footage. You train a linear regression model, and it performs flawlessly on your training data. But when you test it on new, unseen data—it fails miserably. What happened?

This is a classic case of overfitting—your model memorized the training data instead of learning the general patterns. It's like studying only past exam questions and panicking when the actual test asks something slightly different.

Enter Ridge Regression—a smarter, more robust way to perform linear regression, especially when your model starts getting “too good to be true.”

In this beginner-friendly guide, we’ll unpack the theory behind ridge regression, show how to use it in Python with scikit-learn, and help you understand when and why to use it.

What is Ridge Regression?

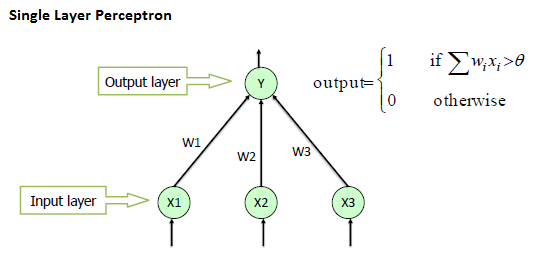

At its core, Ridge Regression is an extension of linear regression with regularization. It’s still trying to fit a line (or hyperplane in multiple dimensions) to your data, but with one key twist: it penalizes large coefficients.

In regular linear regression, the goal is to find coefficients (weights) that minimize the difference between the predicted and actual values. Ridge regression tweaks this goal by adding a penalty to the cost function—the bigger the coefficients, the higher the penalty.

Think of the coefficients as a balloon and the penalty as a small weight tied to it. You still want the balloon to rise (fit the data), but not so freely that it hits the ceiling (overfits the data).
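To make the "penalizes large coefficients" idea concrete, here is a minimal sketch that fits plain linear regression and ridge on the same data and compares the overall size of their coefficients. The dataset and `alpha=10.0` are illustrative choices, not values from this guide.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression, Ridge

# Small synthetic dataset (illustrative values only)
X, y = make_regression(n_samples=50, n_features=10, noise=20, random_state=0)

ols = LinearRegression().fit(X, y)
ridge = Ridge(alpha=10.0).fit(X, y)

# The L2 norm summarizes the overall size of the coefficient vector
ols_norm = np.linalg.norm(ols.coef_)
ridge_norm = np.linalg.norm(ridge.coef_)
print(f"Linear regression coefficient norm: {ols_norm:.2f}")
print(f"Ridge coefficient norm:             {ridge_norm:.2f}")
```

The ridge norm comes out smaller: the penalty pulls the whole coefficient vector toward zero.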

Why Ridge Regression Matters

🧮 Regularization in Machine Learning

Ridge Regression uses a technique called L2 regularization. This means we add the sum of the squared coefficients, scaled by a strength parameter, to the loss function. Why?

Because squaring penalizes large coefficients disproportionately: doubling a coefficient quadruples its penalty. So, if your model is trying to use very large coefficients to reduce prediction error, this regularization pushes back, forcing the model to find a better balance.
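A quick bit of arithmetic shows the effect. The helper `l2_penalty` below is a hypothetical name written for this illustration; it just computes the strength times the sum of squared coefficients.

```python
import numpy as np

def l2_penalty(coefs, lam=1.0):
    """Return lam * sum of squared coefficients (the ridge penalty term)."""
    coefs = np.asarray(coefs, dtype=float)
    return lam * np.sum(coefs ** 2)

concentrated = [10.0, 0.0]  # one large coefficient
spread = [5.0, 5.0]         # same total, split evenly

print(l2_penalty(concentrated))  # 100.0
print(l2_penalty(spread))        # 50.0
```

Both coefficient sets add up to 10, but the one with a single large coefficient pays twice the penalty.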

Here’s what regularization does in simple terms:

Reduces model complexity.

Prevents overfitting.

Improves generalization to new data.
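The sketch below sets up a case where overfitting is likely: few training samples and many features. Everything here (sample counts, noise level, `alpha=5.0`) is an assumption chosen for illustration, and whether ridge scores better on the test split depends on the data and on alpha.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression, Ridge
from sklearn.model_selection import train_test_split

# Few samples, many features: plain least squares can fit the training set almost perfectly
X, y = make_regression(n_samples=40, n_features=30, noise=25, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=1
)

ols = LinearRegression().fit(X_train, y_train)
ridge = Ridge(alpha=5.0).fit(X_train, y_train)

# R^2 on the training data and on held-out data
ols_train_r2 = ols.score(X_train, y_train)
ridge_train_r2 = ridge.score(X_train, y_train)
ols_test_r2 = ols.score(X_test, y_test)
ridge_test_r2 = ridge.score(X_test, y_test)

print(f"Linear regression: train R^2 = {ols_train_r2:.3f}, test R^2 = {ols_test_r2:.3f}")
print(f"Ridge:             train R^2 = {ridge_train_r2:.3f}, test R^2 = {ridge_test_r2:.3f}")
```

Ridge always gives up a little training accuracy compared with plain least squares. The gap between training and test scores is the thing to watch.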

🧠 Tackling Multicollinearity

Multicollinearity happens when your features are highly correlated with each other—making it hard for the model to determine which feature really matters.

Ridge regression shrinks the coefficients of these correlated features and tends to spread the weight across them, rather than letting one coefficient grow large while another cancels it out. The result? More stable and interpretable models.
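Here is a small sketch of that behavior, assuming two features that are nearly copies of each other. The data-generating setup (a true effect of 3 on the first feature, a tiny amount of noise separating the two features) is made up for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge

rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = x1 + 0.01 * rng.normal(size=n)  # almost a copy of x1
X = np.column_stack([x1, x2])
y = 3.0 * x1 + rng.normal(size=n)    # only x1 drives the target

ols = LinearRegression().fit(X, y)
ridge = Ridge(alpha=1.0).fit(X, y)

print("Linear regression coefficients:", ols.coef_)
print("Ridge coefficients:            ", ridge.coef_)
print("Sum of ridge coefficients:     ", ridge.coef_.sum())
```

Plain linear regression has very little information to decide how to split the effect between the two features, so its individual coefficients can swing widely. Ridge keeps the pair small and their sum close to the combined effect.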

Ridge Regression Formula Explained

Let’s break down the math in an approachable way.

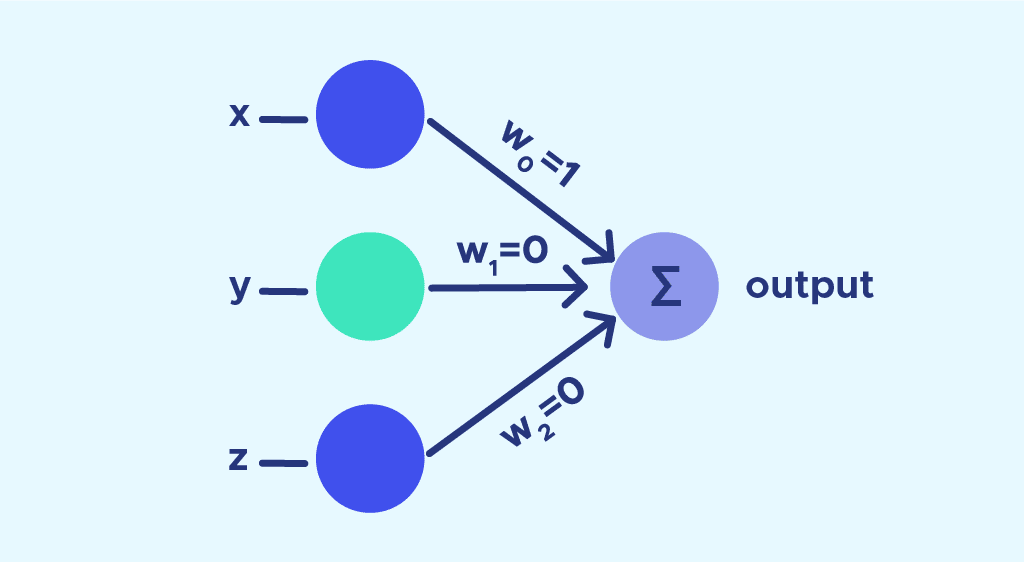

In standard linear regression, we minimize the Residual Sum of Squares (RSS):

$$\text{RSS} = \sum (y_i - \hat{y}_i)^2$$

In Ridge Regression, we minimize the RSS plus a penalty term:

$$\text{Ridge Cost Function} = \sum (y_i - \hat{y}_i)^2 + \lambda \sum w_j^2$$

Where:

$y_i$: actual values

$\hat{y}_i$: predicted values

$w_j$: model coefficients

$\lambda$ (or alpha): regularization strength

The term $\lambda \sum w_j^2$ is the L2 penalty.
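The cost function can be written out directly in NumPy. The sketch below also uses the standard closed-form minimizer, which solves (XᵀX + λI)w = Xᵀy, and checks it against scikit-learn's `Ridge` with the intercept turned off so both optimize the same expression. The data, `true_w`, and `lam` are illustrative assumptions.

```python
import numpy as np
from sklearn.linear_model import Ridge

rng = np.random.default_rng(42)
X = rng.normal(size=(100, 3))
true_w = np.array([2.0, -1.0, 0.5])  # illustrative "true" coefficients
y = X @ true_w + rng.normal(scale=0.5, size=100)
lam = 1.0

def ridge_cost(w, X, y, lam):
    """RSS plus the L2 penalty, matching the formula above."""
    residuals = y - X @ w
    return np.sum(residuals ** 2) + lam * np.sum(w ** 2)

# Closed-form minimizer: solve (X^T X + lam * I) w = X^T y
w_closed = np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

# scikit-learn's Ridge minimizes the same objective when the intercept is disabled
model = Ridge(alpha=lam, fit_intercept=False).fit(X, y)

print("Closed form: ", w_closed)
print("scikit-learn:", model.coef_)
print("Cost at the solution:", ridge_cost(w_closed, X, y, lam))
```

With the default `fit_intercept=True`, scikit-learn also fits an intercept that is not penalized, so the coefficients would differ slightly from this no-intercept version.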

🔁 What does lambda (λ) do?

When λ = 0: Ridge becomes regular linear regression.

When λ is high: Coefficients shrink toward zero, reducing variance but possibly increasing bias.

Tuning lambda helps find that sweet spot where the model is neither too simple nor too complex.
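One way to see this is to fit the same data with several strengths and watch the coefficients shrink. The sketch below does that, then lets scikit-learn's `RidgeCV` pick a value from the same candidates by cross-validation. The candidate list and the dataset are assumptions for illustration, and scikit-learn calls the strength `alpha` rather than lambda.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge, RidgeCV

X, y = make_regression(n_samples=100, n_features=5, noise=10, random_state=0)
alphas = [0.01, 0.1, 1.0, 10.0, 100.0, 1000.0]

norms = []
for alpha in alphas:
    model = Ridge(alpha=alpha).fit(X, y)
    norms.append(np.linalg.norm(model.coef_))
    print(f"alpha={alpha:>7}: coefficient norm = {norms[-1]:.2f}")

# RidgeCV picks one of the candidate alphas by cross-validation
cv_model = RidgeCV(alphas=alphas).fit(X, y)
best_alpha = cv_model.alpha_
print("alpha chosen by RidgeCV:", best_alpha)
```

The norm drops as alpha grows. The value that cross-validation prefers depends on the data, so treat the candidate grid as a starting point.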

Ridge Regression in Practice (Python Example)

Let’s get hands-on using the scikit-learn ridge regression implementation. We'll use a simple synthetic dataset for clarity.

```python
from sklearn.linear_model import Ridge
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
import matplotlib.pyplot as plt

# Generate synthetic data
X, y = make_regression(n_samples=100, n_features=1, noise=15, random_state=42)

# Split into training and testing
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Scale features (important for ridge)
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)

# Train Ridge Regression model
ridge = Ridge(alpha=1.0)
ridge.fit(X_train_scaled, y_train)

# Predict
y_pred = ridge.predict(X_test_scaled)

# Plotting the results
plt.scatter(X_test, y_test, color='blue', label='Actual')
plt.scatter(X_test, y_pred, color='red', label='Predicted')
plt.title("Ridge Regression Example")
plt.xlabel("Feature")
plt.ylabel("Target")
plt.legend()
plt.show()
```

🧪 Key Notes:

We used `StandardScaler` to scale the features, which matters for ridge regression because the penalty depends on the scale of each feature.

Try changing `alpha` to 0.01 or 10 and observe the predictions. You'll see how regularization affects the model.
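If you want to try those alpha values without re-running the scaling steps by hand, one option is to wrap the scaler and the model in a scikit-learn pipeline. This is a sketch of that variation on the example above, not a replacement for it; it reuses the same synthetic data and reports the R² score on the test split instead of plotting.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_regression(n_samples=100, n_features=1, noise=15, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

scores = {}
for alpha in [0.01, 1.0, 10.0]:
    # The pipeline fits the scaler on the training data only, then fits ridge
    model = make_pipeline(StandardScaler(), Ridge(alpha=alpha))
    model.fit(X_train, y_train)
    scores[alpha] = model.score(X_test, y_test)  # R^2 on held-out data
    print(f"alpha={alpha}: test R^2 = {scores[alpha]:.3f}")
```

With a single feature the differences between alpha values are likely to be small. The effect becomes more visible with many or correlated features.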

Pros and Cons

✅ Pros:

Reduces overfitting.

Handles multicollinearity.

More stable than plain linear regression.

Easy to implement with scikit-learn.

❌ Cons:

Doesn’t perform feature selection (unlike Lasso).

Coefficients never become exactly zero.

Requires tuning of `alpha`.

Comparison: Ridge vs. Lasso vs. ElasticNet

| Feature | Ridge | Lasso | ElasticNet |
|---|---|---|---|
| Regularization type | L2 | L1 | L1 + L2 |
| Shrinks coefficients? | Yes | Yes | Yes |
| Sets coefficients to 0? | ❌ No | ✅ Yes | ✅ Sometimes |
| Feature selection? | ❌ No | ✅ Yes | ✅ Yes |
| Best for... | Many small effects | Few strong predictors | A mix of both |

Use Ridge when you believe all features contribute a little.

Use Lasso when you want to eliminate irrelevant features.

Use ElasticNet when you want some of each: Lasso-style feature selection together with Ridge-style stability among correlated features.
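To see the difference in practice, the sketch below fits all three models on a dataset where only 5 of 20 features actually matter and counts how many coefficients each model sets to exactly zero. The dataset, `alpha=1.0`, and `l1_ratio=0.5` are illustrative assumptions; note also that scikit-learn scales the `alpha` penalty differently for `Ridge` than for `Lasso` and `ElasticNet`, so equal values are not equally strong.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNet, Lasso, Ridge

# 20 features, of which only 5 carry signal
X, y = make_regression(
    n_samples=200, n_features=20, n_informative=5, noise=5.0, random_state=0
)

models = {
    "Ridge": Ridge(alpha=1.0),
    "Lasso": Lasso(alpha=1.0),
    "ElasticNet": ElasticNet(alpha=1.0, l1_ratio=0.5),
}

zero_counts = {}
for name, model in models.items():
    model.fit(X, y)
    # Count coefficients that are exactly zero, i.e. features dropped by the model
    zero_counts[name] = int(np.sum(model.coef_ == 0))
    print(f"{name:<10} zero coefficients: {zero_counts[name]} of {X.shape[1]}")
```

Ridge keeps every feature with a small weight, while Lasso drops some of them entirely.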

Conclusion

Ridge Regression is a powerful, beginner-friendly way to improve your linear models—especially when you're facing overfitting or multicollinearity. With just a small tweak to the cost function, Ridge helps your model become more reliable and better at generalizing.

So next time your model seems too good to be true, try adding some Ridge. It just might save your predictions!

Ready to experiment? Load up scikit-learn, tweak that alpha, and watch your model get smarter.

❓FAQs

What is Ridge Regression in machine learning?

Ridge Regression is a type of linear regression that includes L2 regularization, which helps prevent overfitting by shrinking model coefficients.

How is Ridge Regression different from linear regression?

Standard linear regression minimizes only the prediction error, while Ridge adds a penalty for large coefficients to improve generalization.

When should I use Ridge Regression?

Use Ridge when your model is overfitting or when features are highly correlated (multicollinearity).

What is the alpha (λ) parameter?

Alpha (λ) controls the strength of regularization. Higher values shrink the coefficients more aggressively.

Is Ridge better than Lasso?

It depends. Ridge is better when all features contribute to the output. Lasso is better when only a few features matter.

Can Ridge Regression be used for feature selection?

No. Ridge shrinks coefficients but doesn't set them to zero. Use Lasso or ElasticNet for feature selection.

Why do we need to scale features in Ridge Regression?

Because regularization is sensitive to the scale of features. Without scaling, features with larger ranges will dominate the penalty.
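A small experiment makes this visible. The sketch below fits ridge twice on the same data, once with the first feature expressed in a unit 1000 times larger (think meters versus kilometers), with and without `StandardScaler`. The data, the unit change, and `alpha=100.0` are assumptions for illustration, and `fit_predict` is a hypothetical helper.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = 2.0 * X[:, 0] + 3.0 * X[:, 1] + rng.normal(scale=0.5, size=100)

# Same data, but the first feature is expressed in a unit 1000x larger
X_rescaled = X.copy()
X_rescaled[:, 0] /= 1000.0

def fit_predict(model, features):
    """Fit on the given features and return predictions for the same rows."""
    return model.fit(features, y).predict(features)

plain_a = fit_predict(Ridge(alpha=100.0), X)
plain_b = fit_predict(Ridge(alpha=100.0), X_rescaled)
scaled_a = fit_predict(make_pipeline(StandardScaler(), Ridge(alpha=100.0)), X)
scaled_b = fit_predict(make_pipeline(StandardScaler(), Ridge(alpha=100.0)), X_rescaled)

print("Unscaled ridge predictions match:", np.allclose(plain_a, plain_b))
print("Scaled ridge predictions match:  ", np.allclose(scaled_a, scaled_b))
```

Without scaling, a change of units changes the model, because the rescaled feature needs a coefficient 1000 times larger and the penalty makes that expensive. With scaling, both versions of the data lead to the same predictions.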
